It is difficult to be “cynical about a technology that can write a biblical verse in the style of the King James bible explaining how to remove a peanut butter sandwich from a VCR” or generate a swagged-out image of Pope Francis in a puffy jacket.[1],[2] Such novelty exceeds our already inflated expectations of technology. And generative technology is novel.

McCoy et al. studied novelty to determine to what extent the acuity of language models is due to generalization and how much is due to memorization.[3] They show how GPT-2 creates unique words through inflections (e.g., Swissified) and derivations (e.g., IKEA-ness).[4] At the same time, the research shows that 74% of sentences generated possess syntactic structures not explicitly present in the training data. McCoy et al. also discovered that models exhibit more significant novelty for larger (greater than five) n-grams than the baseline, indicating that they do not merely copy what they have “seen” on a larger scale.[5] Unfortunately, all generative models copy some training data.

Most research on memorization is qualitative and focuses on demonstrating the existence of memorized and extractable training data. However, Carlini et al. quantitatively demonstrate that GPT-J-6B memorizes at least 1% of its training dataset, and larger models memorize more data, with a tenfold increase in model size resulting in a 19-percentage point increase in memorization. [6],[7] If larger models are more predictive and do not memorize specific training data, the results would show similar performance between comparably sized models trained on similar data. However, the opposite is true. Larger models have a higher fraction of extractable training data because they have memorized it. While the size of a model may guarantee better accuracy, it is [in large part] because it memorizes more training data.[8],[9]

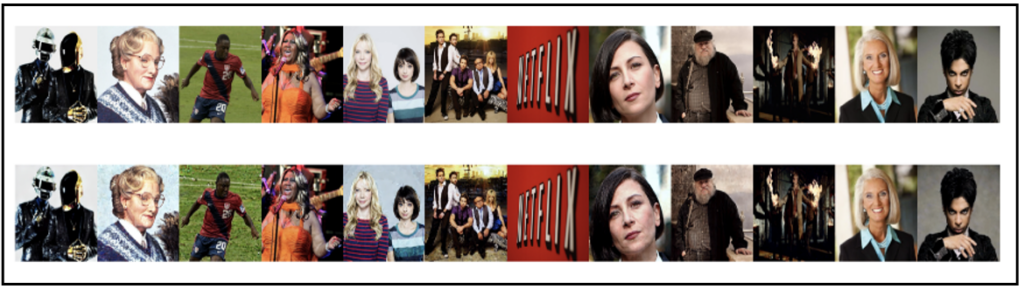

Memorization exists for text-to-image models and even diffusion models. I say “even” diffusion models because some artificial intelligence researchers believe that “diffusion models do not memorize and regenerate training data” and “do not find overfitting to be an issue” and claim that diffusion models “protect the privacy… of real images.” [10],[11],[12] Unfortunately, this is false. Text-to-image and diffusion models specifically battle quality, novelty, and memorization as with pure language models. Carlini et al. show that diffusion models memorize images and extract hundreds of images, many of which are copyrighted or licensed, and some are photos of individuals.[13]

Carlini et al. (2023) used membership Inference to extract copies from Stable Diffusion. The original images are displayed in the top row, while the corresponding extracted images (many of which have some copyright) can be found in the bottom row.

The readiness of generative models to memorize training data presents ethical and legal challenges but would be less significant if the primary function of generative models were not to share everything it knows at any user’s request. In fact, regardless of training data, model architecture, memorization, or fine-tuning, generative technology has one common goal: following user-specified instructions. It is a security officer’s worst nightmare. Since generative models follow instructions and share everything, they may always be vulnerable to data leakage using direct prompt injections, indirect prompt injections, adversarial attacks, exploits, and so-called jailbreaks.[14] These inherently leaky models require industriousness to keep deployment safe and secure.

Certain companies, particularly those in high-risk industries, are aware of the potential for data leakage in these models. Samsung, Apple, JPMorgan Chase, Bank of America, Citigroup, and Goldman Sachs have all prohibited employees from accessing ChatGPT.[15],[16],[17] The concern these companies have is justified. Sharing corporate data with a leaky tool that collects all user prompts for training a model that memorizes data and is stored on external servers commingled with other customers without an easy way to access or delete such data is frightening.[18],[19] However, stonewalling is not managing risk. Instead, it is a losing attempt to eliminate risks at the expense of value.

Morgan Stanley is deploying ChatGPT on private servers to prevent data leakage. However, the dedicated service is expected to cost up to 10 times more than the standard version. This option may appeal to businesses that worry about privacy and do not worry about the cost. It may not appeal to cost-conscious business leaders, nor does it explain the congenital vulnerability of generative models. Instead, companies like Morgan Stanley are throwing money at a problem they may not understand.

Complicating matters is that generative models are trained on uncurated samples of the internet. These massive and often undocumented datasets are inscrutable and difficult to audit for the source of private and personal data, copyrighted materials, and problematic content. Unlike traditional machine learning models trained on internal datasets, generative models present technical, ethical, and legal challenges to businesses resulting from third-party black box models pre-trained on third-party inscrutable datasets.

The problem of using third-party internet-based training datasets is endless. Training datasets collected online are risky for businesses because they are too large to move.

LAION 5B is a popular dataset for training text-to-image generative modes with more than five billion image and text pairs. The dataset is 100TB on disk. Due to its size, LAION shares image pointers and text descriptions instead of the raw data. Users of LAION are expected to download the images using the publicly available URLs. A malicious actor can use these URLs to poison the images or purchase expiring domain names and add poisoned images. Carlini et al. have shown that it takes very few poisoned examples to contaminate machine learning models.[20] Similarly, attackers could introduce malicious prompts or coded information not seen by users into large-scale language datasets that periodically capture crowdsourced content. As a result, the potential for adversarial attacks and data manipulation in these untrusted training datasets presents a significant threat to the integrity and security of generative technology.

While generative technology is novel, it also shares lies, biased content, copyrighted materials, personal and sensitive information, and toxic and problematic content. Its operation is unpredictable, presenting serious challenges for organizations deploying generative technology. In Generative Artificial Intelligence: More Than You Asked For, Clayton Pummill and I help decision-makers anticipate foreseeable risks created by the strange technological artifacts of generative technology. This includes discussing the data used to train these models, the tendency of generative models to memorize training data, vulnerabilities to direct and indirect adversarial prompting, its tendency to leak sensitive information, and the rapidly evolving regulatory and legal concerns. Simply put, the book discusses the “more” you don’t want and promotes safer and more secure implementations for businesses with practical knowledge and sensible suggestions.

Generative Artificial Intelligence: MORE THAN YOU ASKED FOR is available for pre-order: https://a.co/d/b1TeFGY

[1] Ptacek, Thomas H. [@tqbf]. “I’m sorry, I simply cannot be cynical about a technology that can accomplish this.” Twitter, Dec 1, 2022,https://twitter.com/tqbf/status/1598513757805858820

[2] Vincent, James. “The Swagged-out Pope Is an AI Fake – and an Early Glimpse of a New Reality.” The Verge, 27 Mar. 2023, www.theverge.com/2023/3/27/23657927/ai-pope-image-fake-midjourney-computer-generated-aesthetic.

[3] R. Thomas McCoy et al., “How much do language models copy from their training data? Evaluating linguistic novelty in text generation using RAVEN.” (2021).

[4] Interestingly, McCoy et al. asked if the novel words generated by GPT-2 were also of proper morphological structure. They found that 96% of GPT-2’s newly created words exhibit appropriate morphology. This percentage is only slightly below the baseline, which is 99%.

[5] The research compares the different models, revealing that LSTMs are the least novel for small n-grams (less than six), while the Transformer architecture is the most novel, and TXL lies in between. Recurrence heavily conditions predictions on preceding tokens and creates a bias toward memorizing bigrams and trigrams. LSTMs, operating entirely through recurrence, duplicate more than transformers, which rely on self-attention (i.e., no recurrence), allowing conditioning on both near and distant tokens. TXL positions itself between the other two models in novelty, utilizing recurrence and self-attention.

[6] Nicholas Carlini et al., “Quantifying Memorization Across Neural Language Models.” (2023).

[7] With a near-perfect log-linear fit (R2 of 99.8%).

[8] Vitaly Feldman, “Does Learning Require Memorization? A Short Tale about a Long Tail.” (2021).

[9] Percy Liang et al., “Holistic Evaluation of Language Models.” (2022).

[10] Nicholas Carlini et al., “Extracting Training Data from Diffusion Models.” (2023)

[11] Chitwan Saharia et al. “Photorealistic Text-to-Image Diffusion Models with Deep Language Understanding.” (2022).

[12] Ali Jahanian et al. “Generative Models as a Data Source for Multiview Representation Learning.” (2022).

[13] Nicholas Carlini et al., “Extracting Training Data from Diffusion Models.” (2023)

[14] OpenAI has even launched a bug bounty program offering up to $20,000 to anyone who discovers unreported vulnerabilities though it says “model prompts” and jailbreaks are “out of scope.”

[15] Dugar, Urvi. “Apple Restricts Use of OpenAI’s ChatGPT for Employees, Wall Street Journal Reports.” Reuters, 19 May 2023, www.reuters.com/technology/apple-restricts-use-chatgpt-wsj-2023-05-18/.

[16] Horowitz, Julia. “JPMorgan Restricts Employee Use of ChatGPT | CNN Business.” CNN, 22 Feb. 2023, www.cnn.com/2023/02/22/tech/jpmorgan-chatgpt-employees.

[17] Bushard, Brian. “Workers’ CHATGPT Use Restricted at More Banks-Including Goldman, Citigroup.” Forbes, 27 Feb. 2023, www.forbes.com/sites/brianbushard/2023/02/24/workers-chatgpt-use-restricted-at-more-banks-including-goldman-citigroup/.

[18] ChatGPT uses user prompts for training. User prompts enable the model to consider surrounding text, conversation history, or other pertinent information before producing an output. This is called in-context learning, which contrasts with out-of-context learning, where the model generates responses based solely on its pre-existing knowledge without considering the specific context of the input or conversation. https://help.openai.com/en/articles/5722486-how-your-data-is-used-to-improve-model-performance

[19] Plus, in March 2023, a bug in ChatGPT revealed data from other users. https://openai.com/blog/march-20-chatgpt-outage

[20] Nicholas Carlini et al. “Poisoning Web-Scale Training Datasets is Practical.” (2023)

[21] https://www.cnbc.com/2023/03/14/morgan-stanley-testing-openai-powered-chatbot-for-its-financial-advisors.html

[22] In addition to private servers and services, OpenAI offers ChatGPT Business, which will not train their model to use user data (at least not by default).